Support Vector Machines

- 🖊 Multi-class SVMs

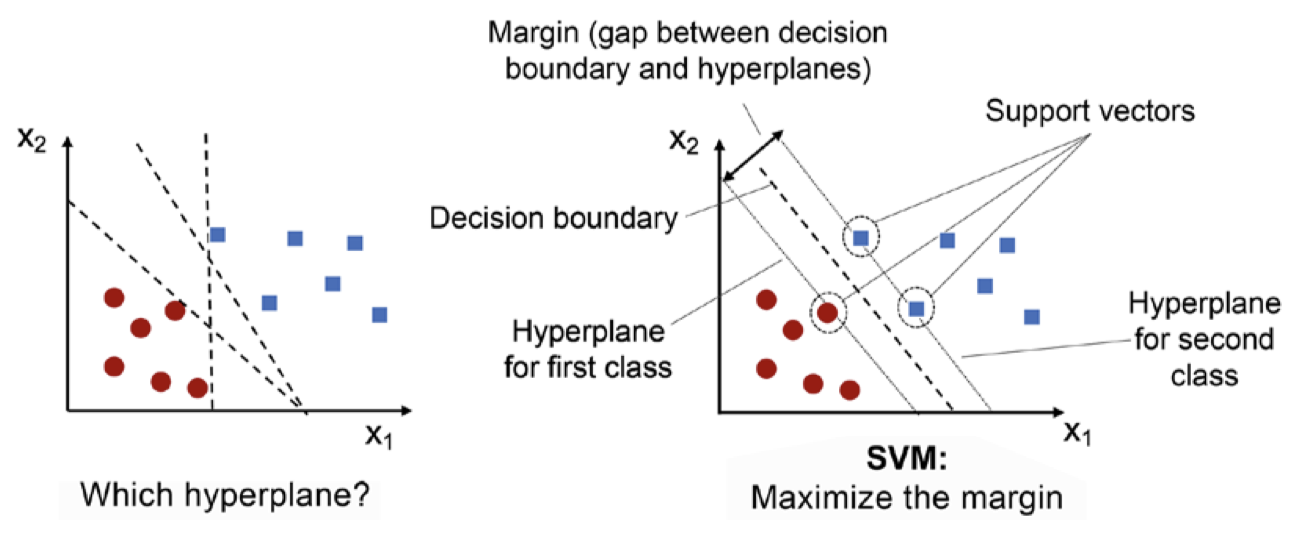

Intuition: The goal of a support vector machine is to maximize the margin, defined as the distance between the separating hyperplane (the decision boundary) and the training examples closest to that hyperplane. These closest training examples are known as support vectors.

Decision boundaries with large margins tend to have lower generalization error, whereas boundaries with small margins are more prone to overfitting.
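To make the margin idea concrete, here is a minimal sketch that fits a linear SVM with scikit-learn and reads off the support vectors and the margin width, computed as 2 / ||w||. The six data points are made up for illustration, and the very large `C` is an assumption used to approximate a hard-margin SVM.

```python
import numpy as np
from sklearn.svm import SVC

# Toy, linearly separable data (made up for illustration).
X = np.array([[1.0, 1.0], [2.0, 1.0], [1.0, 2.0],
              [4.0, 4.0], [5.0, 4.0], [4.0, 5.0]])
y = np.array([0, 0, 0, 1, 1, 1])

# A very large C leaves almost no room for margin violations,
# which approximates a hard-margin SVM.
clf = SVC(kernel="linear", C=1e6).fit(X, y)

w = clf.coef_[0]        # normal vector of the separating hyperplane
b = clf.intercept_[0]   # offset of the hyperplane

# The two margin boundaries are w.x + b = +1 and w.x + b = -1,
# so the distance between them is 2 / ||w||.
margin_width = 2.0 / np.linalg.norm(w)

print("support vectors:\n", clf.support_vectors_)
print("margin width:", margin_width)
```

For this toy data the closest examples are (2, 1) and (1, 2) on one side and (4, 4) on the other, so the printed margin width should be close to 5/√2 ≈ 3.54, up to solver tolerance.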

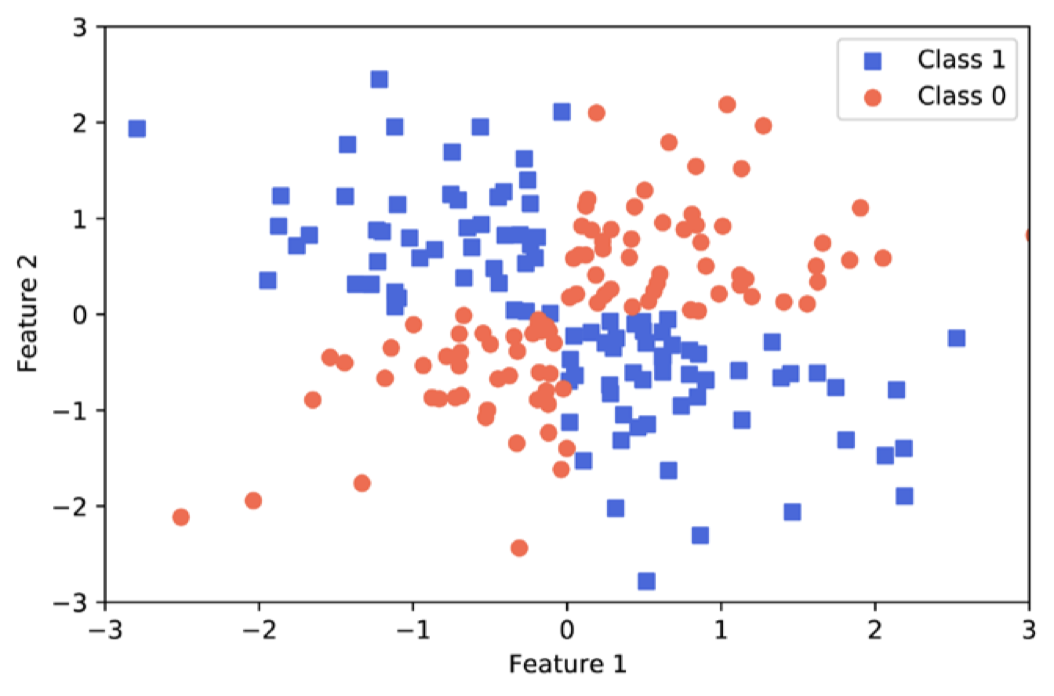

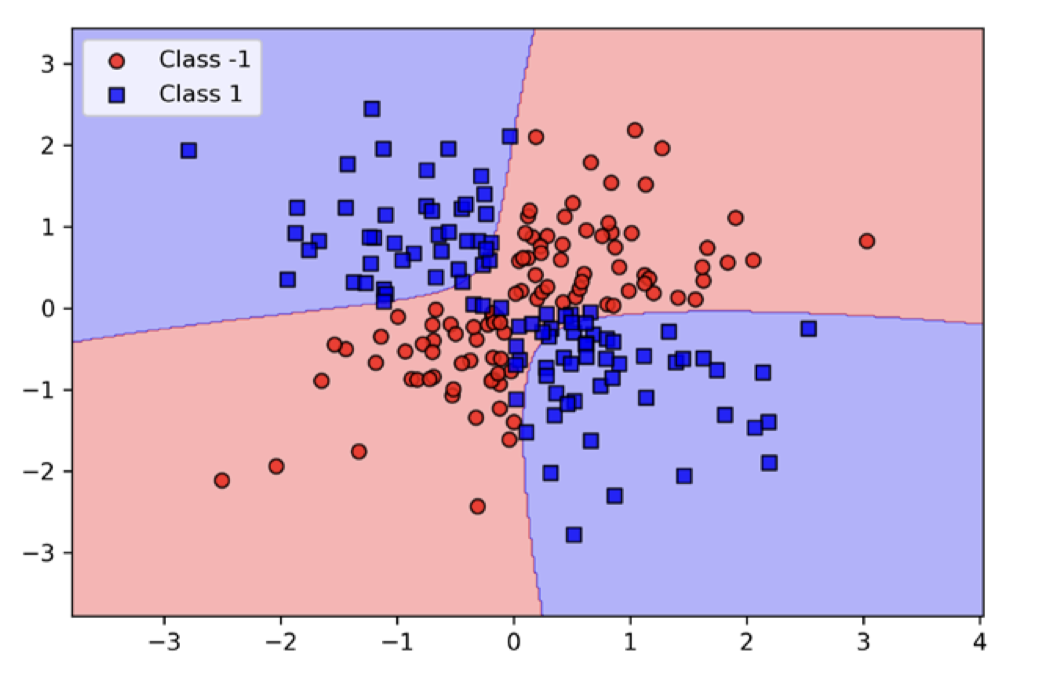

What is Kernel SVM? To solve nonlinear classification problems, kernel methods create nonlinear combinations of the original features and project them onto a higher-dimensional space via a mapping function. In that higher-dimensional space, the data may become linearly separable.
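As a rough illustration of why the projection helps, the sketch below compares a linear SVM with an RBF-kernel SVM on two concentric circles, a dataset no straight line can separate. The dataset, split, and parameter values are assumptions chosen for the example, not part of the notes above.

```python
from sklearn.datasets import make_circles
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Two concentric rings: not linearly separable in the original 2-D space.
X, y = make_circles(n_samples=400, factor=0.3, noise=0.05, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# Same classifier, two different kernels.
linear_acc = SVC(kernel="linear").fit(X_train, y_train).score(X_test, y_test)
rbf_acc = SVC(kernel="rbf", gamma="scale").fit(X_train, y_train).score(X_test, y_test)

print("linear kernel accuracy:", linear_acc)
print("RBF kernel accuracy:   ", rbf_acc)
```

The linear kernel should score near chance level here, while the RBF kernel should separate the rings almost perfectly.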

| Problem | Solution |
|---|---|
|  |  |

How is this done?

Common kernel functions include:

- Linear

- Polynomial

- RBF (radial basis function, also called the Gaussian kernel)

- Sigmoid
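The four kernels listed above can be sketched in plain Python as follows. The parameter names `gamma`, `coef0`, and `degree` mirror scikit-learn's naming, and the default values are assumptions for illustration. The helper `phi` is a hypothetical explicit feature map, included only to show the kernel trick: a degree-2 polynomial kernel returns the same number as a dot product in the higher-dimensional space, without ever building that space.

```python
import math

def dot(x, z):
    """Plain dot product of two equal-length sequences."""
    return sum(a * b for a, b in zip(x, z))

def linear_kernel(x, z):
    return dot(x, z)

def polynomial_kernel(x, z, degree=2, gamma=1.0, coef0=0.0):
    return (gamma * dot(x, z) + coef0) ** degree

def rbf_kernel(x, z, gamma=1.0):
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * sq_dist)

def sigmoid_kernel(x, z, gamma=1.0, coef0=0.0):
    return math.tanh(gamma * dot(x, z) + coef0)

def phi(x):
    """Explicit 3-D feature map for 2-D inputs (hypothetical helper).

    Its dot product equals the polynomial kernel with
    degree=2, gamma=1, coef0=0.
    """
    x1, x2 = x
    return (x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2)

x, z = (1.0, 2.0), (3.0, 0.5)

implicit = polynomial_kernel(x, z)   # computed in the original 2-D space
explicit = dot(phi(x), phi(z))       # computed in the mapped 3-D space

print("kernel value:         ", implicit)
print("explicit dot product: ", explicit)
```

Both printed values should agree up to floating-point rounding, which is the point of the kernel trick: the SVM only ever needs kernel values, never the mapped coordinates themselves.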

Applications? SVMs are well suited to:

- Image recognition

- Text category assignment

- Detecting spam

- Sentiment analysis

- Gene expression classification

- Regression, outlier detection, and clustering

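Pros and Cons?

Advantages

- Accurate in high-dimensional spaces

- Memory efficient, since the decision function depends only on the support vectors

Disadvantages

- Prone to overfitting, especially when features far outnumber samples and the kernel or regularization is poorly chosen

- No direct probability estimates

- Best suited to small datasets: training becomes computationally expensive with a large number of rows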
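On the probability point: an SVM natively outputs signed distances to the decision boundary, not probabilities. One common workaround, sketched below under the assumption that scikit-learn is available, is to wrap the classifier in `CalibratedClassifierCV` with sigmoid (Platt) scaling. The synthetic dataset and parameter values are made up for illustration.

```python
from sklearn.calibration import CalibratedClassifierCV
from sklearn.datasets import make_classification
from sklearn.svm import SVC

# Synthetic binary classification data (made up for illustration).
X, y = make_classification(n_samples=300, n_features=10, random_state=0)

# A plain SVM only gives signed distances to the decision boundary.
svm = SVC(kernel="rbf").fit(X, y)
scores = svm.decision_function(X[:3])

# Sigmoid calibration fits a logistic curve on held-out SVM scores
# (5-fold cross-validation) to turn them into probability estimates.
calibrated = CalibratedClassifierCV(SVC(kernel="rbf"), method="sigmoid", cv=5)
calibrated.fit(X, y)
probs = calibrated.predict_proba(X[:3])

print("raw decision scores:", scores)
print("calibrated probabilities:\n", probs)
```

The calibration step refits the SVM several times, so it adds to the training cost already noted above for larger datasets.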